The new Siri with Apple Intelligence looks like a huge leap forward — but it's missing one thing

Much improved, but it could be even better

Apple’s embarking on a new journey, one that could dramatically transform the way we interact with our iPhones. At WWDC 2024, the company unveiled its grand plans with Apple Intelligence, which will power many of the new Siri experiences coming to iOS 18, iPadOS 18, and macOS Sequoia.

One of the new Siri features that caught my attention is in-app actions. This effectively puts Siri in control of more of the iPhone apps we love, including third-party ones through the App Intents API that Apple’s expanding for developers. The demos of Siri’s new abilities were nothing short of impressive, but Siri is still missing one feature that I think exemplifies the true potential of artificial intelligence.

I’m referring to how Siri, even with its enhanced Apple Intelligence functionality, lacks the ability to proactively take actions — without requiring my intervention. I think this is the one feature that could really take Apple’s AI ambitions to the next level. Here’s why.

The AI missing link

I’ve written extensively about how I use AI features on my phones to streamline tasks that would otherwise be time-consuming to do the old-fashioned way. From the Galaxy S24 Ultra’s ability to turn any video into slow motion with the help of generative AI to Google Assistant taking phone calls for me, the AI features in today’s best phones save me a tremendous amount of time, and for that I’m grateful.

However, it’s tough for me to believe these are really powered by artificial intelligence. Why’s that? Well, the AI features I’ve come to rely on all have one thing in common: they require my interaction. The missing link here is the ability for a voice assistant such as Siri to perform actions all on its own.

Apple CEO Tim Cook detailed the company’s plan for Apple Intelligence. “Our unique approach combines generative AI with a user’s personal context to deliver truly helpful intelligence,” said Cook. I’m all for helpful intelligence, but what Apple showed lacks the awareness I crave from real artificial intelligence.

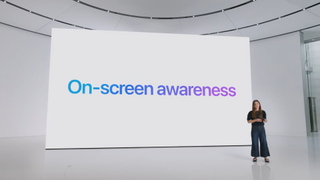

Intelligence that’s aware

What I'm referring to here is the ability to be aware of what's going on and to make proactive suggestions or take actions based on that awareness. From all the demos, it’s apparent that many of the Apple Intelligence features react to commands that I would have to initiate, rather than Siri acting on its own.

Take, for example, the common situation of running late for a meeting. If you’re driving and can’t access your phone, it would be impressive if the artificial intelligence in the phone were smart enough to know that you’re running late and inform the other attendees. I would appreciate it if AI could detect that and automatically message everyone on the meeting invite to let them know that I’m running late, along with my expected time of arrival (based on my phone’s GPS coordinates).

Here are several other examples of how Siri could take proactive actions:

- Reminding you to bring an umbrella before you leave the house, based on the forecast.

- Activating your bus ticket in a transit app once you’ve arrived at your usual pickup location.

- Acting as a watchful eye on HomeKit-enabled security cameras to notify you about emergencies and possible intrusions.

- Knowing where you left off watching a movie on your iPhone, then resuming it on your Apple TV.

- Turning on the lights at home as you approach.

- Reminding you to make mortgage or rent payments based on your payment history.

Apple Intelligence isn’t there yet, especially when it comes to Siri on my iPhone taking those actions on its own. At the very least, I would love to get a suggestion from Siri to do them. From the looks of it, this could be possible with AI agents that act on your behalf while also learning and improving themselves. That’s an important concept because I think it’s fundamental to drawing the line between features that run on command and features that act on their own, learning through trial and error.

I suspect it’s only a matter of time before we really get this kind of artificial intelligence. It’s one thing to be aware of what I’m saying and the apps I’m using, but it’s another to take action through self-awareness. Perception is the key to unlocking the true power of artificial intelligence, something that’s lacking in the current iterations of what companies such as Apple market as AI.

John’s a senior editor covering phones for Tom’s Guide. He’s no stranger to this area, having covered mobile phones and gadgets since 2008, when he started his career. On top of his editor duties, he’s a seasoned videographer, working both in front of and behind the camera producing YouTube videos. Previously, he held editor roles with PhoneArena, Android Authority, Digital Trends, and SPY. Outside of tech, he enjoys producing mini documentaries and fun social clips for small businesses and enjoying the beach life at the Jersey Shore, and he recently became a first-time homeowner.